[Draft article to be published in the January/February 2025 issue (Volume 37, Issue 1) of Assessment Update]

Data Science for Assessment

David Eubanks and Scott Moore

Introduction

Assessment and institutional research offices have too much data and too little time. Standard reporting often crowds out opportunities for innovative research. It's like being stuck in a leaking rowboat, bailing water just to stay afloat. Fortunately, advancements in data science now offer a clear solution, one that is equal parts technique and philosophy. The first and easiest step is to modernize our data work.

Tell, Don’t Do

Transactional tools like Excel require manual interaction for each operation, such as renaming a column or deleting a row, which must be repeated each time the task is performed. Such row and column operations are the building blocks of reports, and a large report can require hundreds of these. This makes report reproduction slow and error-prone. The solution is to use a descriptive tool instead. We can speed up the production of reports by writing them only once and then using that template to automatically produce future ones.

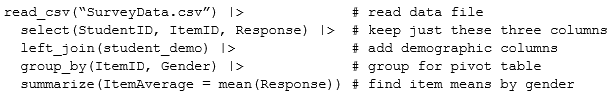

A descriptive approach involves using a set of written instructions to perform these tasks. There are many choices for a scripting language to do that, but we recommend R and the tidyverse package, which is intuitive to use, quick to learn, and free. An example is shown below with comments on the right.

The example shows a typical step in a report on survey data. It is read top to bottom, with the “|>” at the end of each line “piping” the results of one step to the next, like an assembly line. (Anything to the right of the hash “#” is a comment.) The short script describes reading in a data file, selecting the columns we want to work with, joining it to associated student information, and creating a pivot table by survey item and student gender to compute item averages. We could just as easily compute item distributions. With a little extra effort, we could plot them as histograms or run a regression analysis.
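The steps described above might be sketched in R roughly as follows. This is a minimal illustration, not the authors' actual script: the toy data, the file, and every column name (`student_id`, `q1`, `q2`, `gender`) are hypothetical stand-ins, and it assumes R 4.1 or later with the tidyverse installed.

```r
library(tidyverse)

# Hypothetical toy data standing in for real files; all names are illustrative.
survey_file <- tempfile(fileext = ".csv")
write_csv(
  tribble(
    ~student_id, ~timestamp,   ~q1, ~q2,
    1,           "2024-10-01", 4,   5,
    2,           "2024-10-01", 2,   3,
    3,           "2024-10-02", 5,   3,
    4,           "2024-10-02", 3,   4
  ),
  survey_file
)
students <- tribble(
  ~student_id, ~gender,
  1,           "F",
  2,           "M",
  3,           "F",
  4,           "M"
)

item_means <- read_csv(survey_file, show_col_types = FALSE) |>  # read the survey data file
  select(student_id, q1, q2) |>                                 # keep only the columns we need
  left_join(students, by = "student_id") |>                     # attach student information
  pivot_longer(c(q1, q2),                                       # reshape to one row per response
               names_to = "item", values_to = "response") |>
  group_by(item, gender) |>                                     # group by survey item and gender
  summarize(mean_response = mean(response), .groups = "drop") |> # compute item averages
  pivot_wider(names_from = gender, values_from = mean_response) # one column per gender

print(item_means)
```

In practice the toy-data setup would disappear and `read_csv()` would point at the real survey export. Swapping `mean()` for a count of each response value would give item distributions instead of averages.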

Once a script is written and tested, it runs nearly instantaneously. That means the next time we want to run the report, we can spend time improving it by adding new features instead of redoing all the same work as we would with a transactional tool like Excel. This unlocks the superpower of iteration, which is essential to doing science. As a bonus, it creates a transparent and reproducible workflow for data analysis, so when we inevitably have validity questions, we can trace every stage of data manipulation to look for errors.

Learning enough of this descriptive language to be productive takes most people a couple of months, in our experience. The resulting time savings allow more data operations to be automated, creating a virtuous cycle of freeing up time and learning more advanced features of the language. The result is a transformation of how we work. It’s not just a matter of producing reports a hundred times more quickly; the iteration superpower unlocks the freedom to try out ideas, including ideas from the broader research community. This powerful combination of features allows us to become data scientists instead of bilge pumps. That leads to the philosophical transformation: what do we do with these superpowers?

Think Again

Imagine this scenario: a vice president at your institution reads a report showing that learning outcome averages are lower for commuter students than for residents. She thinks this is because commuters have fewer social opportunities and convinces the cabinet to budget for new programming for those students. Two years later, the data show no change. Still, the vice president thinks something’s probably wrong with the data and finds some anecdotes to advertise the value of the initiative. What, if anything, has gone wrong here?

Data analysis often serves to (1) determine what is happening, (2) make an educated guess about cause and effect, and (3) recommend actions to improve the situation. You’ll recognize that list as a rephrased assessment cycle. But from the perspective of report writing, we can lose sight of the grandeur of the project: the goal of our work is to understand reality well enough to effect change.

History shows that understanding reality accurately is not an easy task. As detailed in Steven Shapin’s The Scientific Revolution, moving to a more accurate understanding of reality means changing our minds about what is real, which is difficult for anyone but especially for those with authority and a reputation to protect.

Data science is big business, and Benn Stancil is an insider who shares his thoughts on the state of the industry at benn.substack.com. In one piece he writes about the role of analysts through the lens of Daniel Kahneman’s framework of System 1 / System 2 thinking. System 1 represents our instinctive, automatic thinking, while System 2 involves deliberate, analytical reasoning. Most data analysts produce System 2-oriented products: charts and tables of numbers, perhaps with some inference about meaning. Stancil argues that what we should be doing instead is trying to affect System 1 thinking: the fundamental ways we assume the world is organized.

In the hypothetical example above, the vice president concluded from the initial report that commuters were underperforming academically, but the why question was left unanswered. That epistemological vacuum invites an explanation from the latent System 1 model already in place (“commuter students do worse because they don’t benefit from the residential student experience”). The problem is that by leaving out an analysis of causes, we surrender to existing System 1 models of the world and have no lever to move those pre-existing beliefs. If the original report had included explanatory variables, it might have concluded that the commuter population had a different socio-economic profile and that this might be the cause of the gap. However, once the institution has committed resources and reputation to a project based on an assumption, the opportunity to challenge that assumption is likely lost. Motivated reasoning can result in new information being ignored if it contradicts the desired outcome. This phenomenon is detailed in books like Adam Grant’s Think Again or Julia Galef’s The Scout Mindset. Practically, it’s important to cultivate relationships with campus leaders to understand the existing System 1 beliefs and anticipate opportunities to update them.
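To make the idea of including explanatory variables concrete, here is a small sketch in base R. The eight rows are hand-built, hypothetical data, deliberately constructed so that a low-income indicator (an assumed stand-in for socio-economic profile) accounts for the commuter gap. It illustrates the mechanics only and is not evidence about real students.

```r
# Hand-built toy data (hypothetical, not real students), constructed so that
# low-income status, not commuting, drives the difference in scores.
students <- data.frame(
  commuter   = c(0, 0, 0, 0, 1, 1, 1, 1),
  low_income = c(0, 0, 0, 1, 0, 1, 1, 1),
  score      = c(3.6, 3.4, 3.5, 2.5, 3.5, 2.4, 2.6, 2.5)
)

# Report 1: compare commuters to residents with no explanatory variables.
naive <- lm(score ~ commuter, data = students)

# Report 2: the same comparison, adjusting for the low-income indicator.
adjusted <- lm(score ~ commuter + low_income, data = students)

print(round(coef(naive), 2))
print(round(coef(adjusted), 2))
```

In this contrived data the commuter coefficient is about -0.5 in the first model and about zero in the second, with the gap shifting to the low-income indicator. Real data will rarely be this tidy, and an adjusted model still does not establish cause. It does give readers an alternative explanation to weigh before resources are committed.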

Analyzing causes is fraught with problems, which is why science proceeds slowly, and sometimes backtracks. It’s imperative that a data scientist be part of a larger research community to share and test new ideas about causal relationships.

Implications for Assessment Offices

Much current assessment work does not yet incorporate data science techniques, which presents an opportunity for specialization. An office may have multiple staff working with faculty, and could add a data scientist role, either within the office or in institutional research. Directors should know this possibility exists and be aware of how to train and hire for it. The technical skill needed is the ability to learn a scripting language like R/tidyverse and enough statistics to get started. This is not a high bar. You can begin by identifying a routine report that could be automated and start learning how a tool like R can transform that process. The initial investment in time will pay off in freeing up resources for higher-level analysis.

For directors, the role might include the technical part of data science, but it need not. More important is the leadership role of understanding the System 1 / System 2 distinction as we’ve described it here and developing the relationships that facilitate the updating of beliefs. This is a big responsibility, as we never have perfect data, identifying causes is virtually impossible in educational research, and leaders are often reluctant to change their minds. We must be modest in our claims yet forceful enough to align System 1 thinking with the data. To make the task tractable, it’s essential to participate in the larger research community; none of us can do all the work ourselves, and none of us has a monopoly on good ideas.

Modernizing data science practices significantly enhances the value of assessment offices in addressing higher education's pressing challenges. This leads to greater demand for the services of the IR/assessment office and increased influence in driving changes that benefit students.

David Eubanks, Assistant Vice President for Assessment and Institutional Effectiveness, Furman University; and Scott Moore, Independent Consultant for Leaders in Higher Education, Palmetto Insights.